In recent years, we have seen a spectacular transformation in industry, with a growing number of companies embracing artificial intelligence in general, and Deep Learning in particular, as a key part of their production strategies.

This technological revolution has been driven by a combination of factors that have made these techniques an indispensable tool for improving industrial production lines.

Machine Learning and Deep Learning

Machine Learning is a fundamental branch of Artificial Intelligence that allows computer systems to improve their performance on a specific task as they gain experience. Its essence lies in the ability of these algorithms to learn patterns and make decisions from data and previous experience, without being explicitly programmed for each case.

Deep Learning is a subset of Machine Learning that is based on deep neural networks to model and solve complex problems. Unlike traditional machine learning, which requires manual feature extraction, Deep Learning allows algorithms to automatically learn feature hierarchies from data.
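To make the contrast concrete, here is a minimal sketch of the "traditional" approach described above. A person designs the feature by hand (here, the mean brightness of a grayscale image), and only the decision threshold is learned from labeled examples. The function names, the tiny 2x2 images and the dark/bright classes are all hypothetical. A Deep Learning model would instead learn the features themselves from the raw pixels.

```python
def mean_intensity(image):
    """Hand-crafted feature: average grayscale value (0-255) of the image."""
    pixels = [p for row in image for p in row]
    return sum(pixels) / len(pixels)


def learn_threshold(features, labels):
    """Learn, from labeled examples, the threshold that best separates class 0 from class 1.

    A sample is predicted as class 1 when its feature is >= the threshold.
    """
    best_threshold, best_correct = None, -1
    for candidate in sorted(set(features)):
        # Count how many training samples this candidate threshold classifies correctly.
        correct = sum((f >= candidate) == (y == 1) for f, y in zip(features, labels))
        if correct > best_correct:
            best_threshold, best_correct = candidate, correct
    return best_threshold


# Hypothetical labeled training set of 2x2 grayscale images: 0 = dark part, 1 = bright part.
train_images = [
    [[10, 20], [30, 40]],
    [[20, 20], [20, 20]],
    [[200, 210], [220, 230]],
    [[180, 190], [200, 210]],
]
train_labels = [0, 0, 1, 1]

features = [mean_intensity(img) for img in train_images]
threshold = learn_threshold(features, train_labels)

# Classify a new, unseen image with the learned threshold.
new_image = [[240, 235], [238, 242]]
prediction = 1 if mean_intensity(new_image) >= threshold else 0
print(threshold, prediction)
```

The program was never told which brightness separates the classes; it found that value from the data. It could not, however, discover that a different feature (for example, the shape of a part) would work better. That limitation is what Deep Learning removes.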

The rise of Deep Learning took place in the mid-2010s, driven by the convergence of several key factors:

- Deep neural networks require a huge amount of computation to train. The substantial increase in the processing power of graphics processing units (GPUs) provided the infrastructure needed to perform this computation efficiently.

- The availability of massive datasets, especially of labeled images, allowed models to learn richer and more general representations.

- The introduction of new and more efficient architectures, such as convolutional neural networks (CNNs) specialized in image processing, significantly improved performance on specific tasks.

Deep Learning has continued to evolve and remains a driving force in many fields, among which Artificial Vision stands out. This has made it a very attractive technology for process automation and in-line production analysis.

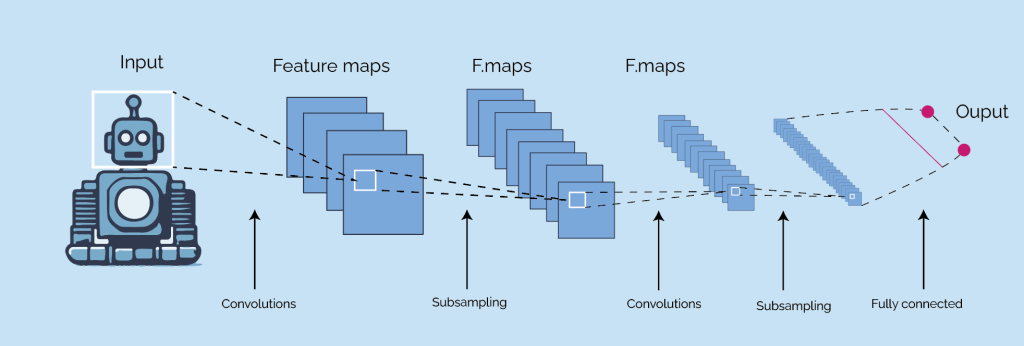

Another key factor behind the exceptional performance of Deep Learning in image tasks is the use of convolutional neural networks (CNNs). CNNs are designed to process images. By applying filters (convolutions) to small regions of the image, they extract patterns such as edges, textures and shapes, along with other features needed to understand complex visual content. The deeper a layer sits in the network, the more complex the features it extracts. Once all the filters have been applied, the resulting features are fed into a conventional (fully connected) neural network that produces the final result.
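The filtering step described above can be sketched in plain Python. This is a minimal illustration, not how a production CNN is implemented. It slides one 3x3 filter over a small grayscale image (no padding, stride 1) and computes a weighted sum at each position, which yields a "feature map". The vertical-edge kernel is written by hand here, whereas a CNN learns its kernel weights during training. The ReLU step is an assumption: it is a common CNN activation that the article does not mention.

```python
def convolve2d(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and return the feature map.

    Like most deep learning libraries, this computes a cross-correlation:
    the kernel is not flipped.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    feature_map = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Weighted sum of the small image region currently under the kernel.
            acc = 0
            for di in range(kh):
                for dj in range(kw):
                    acc += image[i + di][j + dj] * kernel[di][dj]
            row.append(acc)
        feature_map.append(row)
    return feature_map


def relu(feature_map):
    """Keep positive responses and set negative ones to zero (common CNN activation)."""
    return [[max(0, v) for v in row] for row in feature_map]


# Hand-written vertical-edge filter (hypothetical); in a CNN these weights are learned.
VERTICAL_EDGE = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

# 4x5 image: dark on the left, bright on the right.
image = [
    [0, 0, 0, 9, 9],
    [0, 0, 0, 9, 9],
    [0, 0, 0, 9, 9],
    [0, 0, 0, 9, 9],
]
print(relu(convolve2d(image, VERTICAL_EDGE)))
```

The feature map is zero over the uniform dark region and large wherever the window straddles the dark-to-bright boundary, so this filter "detects" that edge. A real CNN stacks many such learned filters, and deeper layers combine these responses into detectors for more complex shapes.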

Applications

Image classification

Image classification is a task in which the model must assign a label or category to a given image. CNNs are trained to recognize the patterns and characteristics that distinguish the different classes. For example, a model could be trained to recognize cats and dogs, and then classify new images into these categories.
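For the cats-and-dogs example, the network typically ends with one raw score per class. Those scores are turned into probabilities, and the class with the highest probability becomes the label. The sketch below assumes a softmax output, which is the usual choice for single-label classification. The score values are hypothetical stand-ins for what a trained network would produce for one image.

```python
import math


def softmax(scores):
    """Convert raw class scores (logits) into probabilities that sum to 1."""
    # Subtracting the maximum keeps exp() from overflowing; the result is unchanged.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def classify(scores, class_names):
    """Return the most probable class name and its probability."""
    probs = softmax(scores)
    best = max(range(len(probs)), key=lambda k: probs[k])
    return class_names[best], probs[best]


# Hypothetical raw scores produced by a trained network for one image.
label, confidence = classify([2.0, 0.5], ["cat", "dog"])
print(label, round(confidence, 3))
```

The same function works unchanged for an industrial classifier with classes such as "ok", "scratch" or "dent". Only the list of class names and the number of scores change.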

Image classification is a key application for quality control and product identification processes since, among other things, it makes it possible to automatically classify different products or parts and to detect anomalies or defects.

Object detection

Object detection involves locating and identifying multiple objects within an image. Unlike image classification, here the model not only identifies what is in the image, but also marks the position of each detected object with a rectangle (a bounding box) that frames it. In the industrial field, object detection can be used to count, locate and track products.

Detection of objects with artificial vision
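When object detection is used to count products, one practical detail matters. Detectors usually output many candidate boxes with confidence scores, and several of them often cover the same object. A common post-processing step, which the article itself does not describe, is non-maximum suppression (NMS). NMS keeps the highest-scoring box and discards others that overlap it too much, with overlap measured as Intersection over Union (IoU). The sketch below assumes boxes in `(x1, y1, x2, y2)` pixel coordinates. The detections and both thresholds are hypothetical.

```python
def iou(box_a, box_b):
    """Intersection over Union of two boxes given as (x1, y1, x2, y2), with x2 > x1, y2 > y1."""
    ix1 = max(box_a[0], box_b[0])
    iy1 = max(box_a[1], box_b[1])
    ix2 = min(box_a[2], box_b[2])
    iy2 = min(box_a[3], box_b[3])
    inter = max(0, ix2 - ix1) * max(0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(detections, iou_threshold=0.5, score_threshold=0.3):
    """Greedy NMS over (box, score) pairs.

    Low-confidence boxes are dropped first; then, from the highest score down,
    a box is kept only if it does not overlap an already kept box too much.
    The default thresholds are hypothetical and would be tuned per application.
    """
    candidates = sorted(
        (d for d in detections if d[1] >= score_threshold),
        key=lambda d: d[1],
        reverse=True,
    )
    kept = []
    for box, score in candidates:
        if all(iou(box, k[0]) < iou_threshold for k in kept):
            kept.append((box, score))
    return kept


# Hypothetical raw detector output: two overlapping boxes on the same product,
# one box on a second product, and one low-confidence false alarm.
detections = [
    ((10, 10, 50, 50), 0.92),
    ((12, 11, 52, 49), 0.85),
    ((100, 20, 140, 60), 0.88),
    ((200, 200, 210, 210), 0.10),
]
products = non_max_suppression(detections)
print("products counted:", len(products))
```

Without this step, the two overlapping boxes would be counted as two products. Tracking products across frames typically builds on the same IoU measure to match boxes from one frame to the next.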

Image segmentation

Image segmentation goes beyond object detection and assigns a label to each pixel of the image, dividing it into different regions with specific meanings. There are two types of segmentation, depending on their purpose:

- Semantic segmentation aims to assign a semantic label (a category) to each pixel of the image. This means that all pixels belonging to the same category receive the same label, even if they belong to different objects of that category. For example, if we are segmenting an image containing a car and a traffic light, semantic segmentation would mark all the pixels that belong to the car with one label and all the pixels of the traffic light with another.

- Instance segmentation goes beyond semantic segmentation, as it aims to identify and differentiate each individual object in an image. In this case, we not only assign semantic labels, but also a distinct label to each specific object. This makes it possible to distinguish similar objects that belong to the same category. In the industrial field, instance segmentation is by far the most useful: it makes it possible to identify the entire area of a product, as well as specific details or critical areas of it.
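The difference between the two outputs can be illustrated on a tiny mask. In a semantic mask, every "product" pixel carries the same label. An instance map gives each separate product its own identifier, which also makes it possible to measure the area of each one. The sketch below derives instances from a semantic mask using connected-component labeling. This is a classical stand-in chosen for illustration, not what an instance segmentation network does: such networks predict a separate mask per object and can separate objects that touch, while this approach cannot. The mask values are hypothetical.

```python
from collections import deque


def label_instances(mask):
    """Split a binary semantic mask into instances using 4-connected components.

    mask[r][c] is 1 where the pixel belongs to the class (e.g. "product"), 0 for background.
    Returns an instance map (0 = background, 1..N = instance id) and the pixel area of each instance.
    """
    rows, cols = len(mask), len(mask[0])
    instances = [[0] * cols for _ in range(rows)]
    areas = {}
    next_id = 0
    for r in range(rows):
        for c in range(cols):
            if mask[r][c] and not instances[r][c]:
                # Found a class pixel not yet assigned to any instance: start a new one.
                next_id += 1
                instances[r][c] = next_id
                queue = deque([(r, c)])
                area = 0
                # Breadth-first flood fill over the pixels of this object.
                while queue:
                    y, x = queue.popleft()
                    area += 1
                    for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                        if 0 <= ny < rows and 0 <= nx < cols and mask[ny][nx] and not instances[ny][nx]:
                            instances[ny][nx] = next_id
                            queue.append((ny, nx))
                areas[next_id] = area
    return instances, areas


# Semantic mask: every "product" pixel has the same label (1).
semantic_mask = [
    [1, 1, 0, 0, 0],
    [1, 1, 0, 1, 1],
    [0, 0, 0, 1, 1],
    [0, 0, 0, 1, 1],
]
instance_map, areas = label_instances(semantic_mask)
for row in instance_map:
    print(row)
print(areas)
```

The semantic mask only says "there is product here". The instance map says "there are two products, of 4 and 6 pixels". Per-object information like this is what makes instance segmentation attractive for inspection.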

FAQs

What is Deep Learning and how is it integrated into an Artificial Vision system?

Deep Learning is a machine learning technique that uses deep neural networks to learn complex patterns from data. In the context of Artificial Vision, Deep Learning is usually integrated through convolutional neural networks (CNNs). These networks can automatically learn feature hierarchies in images, allowing object detection, classification and other visual tasks to be fully automated. Integrating Deep Learning into Artificial Vision systems improves the system's ability to interpret and understand images more precisely and efficiently. This has driven significant advances in applications such as quality control and predictive maintenance.

How can Deep Learning improve the quality of industrial products?

Can I incorporate an Artificial Vision system with Deep Learning in any production line?

In general, Deep Learning can be incorporated when the relevant information is visible to the naked eye or can be captured by cameras. This technology is especially effective for tasks such as defect detection, product classification and quality control. Deep Learning is particularly powerful for complex problems with a large amount of data. If the problem is not very complex, or a large amount of data is not available, it may be more convenient to use other, simpler techniques.
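As an example of such a simpler technique, consider a classical rule-based check. It requires no training data and can work when defects are high-contrast and lighting is tightly controlled. The sketch below flags a part as defective if enough pixels are darker than a fixed threshold. The function name, both thresholds and the sample images are hypothetical and would have to be tuned on real parts.

```python
def has_dark_defect(image, dark_threshold=50, min_defect_pixels=3):
    """Flag an image as defective if enough pixels are darker than `dark_threshold`.

    image: grayscale pixel rows with values 0-255.
    Both thresholds are hypothetical and would be tuned on real parts.
    """
    dark_pixels = sum(1 for row in image for p in row if p < dark_threshold)
    return dark_pixels >= min_defect_pixels


# Hypothetical 3x3 grayscale crops of a bright part.
clean_part = [
    [200, 198, 201],
    [199, 202, 200],
    [201, 200, 199],
]
scratched_part = [
    [200, 20, 201],
    [199, 15, 200],
    [201, 18, 199],
]
print(has_dark_defect(clean_part), has_dark_defect(scratched_part))
```

A rule like this is easy to understand and cheap to run, but it breaks down when lighting, surface finish or defect appearance vary. Those are the conditions under which a learned model tends to pay off.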

What types of data are necessary for the effective training of deep learning models in an industrial environment?

The type of data may vary depending on the specific application but, in general, labeled datasets (mainly images) are required that reflect the diversity and possible variations in environmental conditions and industrial operations. As a rule, the more images available, the more effective the training and the better the model will perform the task it was trained for. Depending on the complexity of the problem, anywhere from a few hundred to tens of thousands of images may be needed.
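One practical point follows from the need for labeled data. Part of the dataset is usually held out as a validation set to check how well the trained model generalizes to images it has not seen. The article does not prescribe a method. The sketch below assumes a stratified split, in which each class is divided separately so that rare classes (such as a particular defect type) still appear in the validation set. The file names, class names and 20% validation fraction are hypothetical.

```python
import random
from collections import defaultdict


def stratified_split(samples, val_fraction=0.2, seed=0):
    """Split (image_path, label) pairs into train/validation sets, keeping class proportions.

    Each class is shuffled and split on its own, so rare classes
    (e.g. a defect type) still appear in the validation set.
    """
    by_label = defaultdict(list)
    for sample in samples:
        by_label[sample[1]].append(sample)
    rng = random.Random(seed)  # fixed seed so the split is reproducible
    train, val = [], []
    for label in sorted(by_label):
        group = by_label[label][:]
        rng.shuffle(group)
        # At least one validation sample per class, unless the class has a single image.
        n_val = max(1, round(len(group) * val_fraction)) if len(group) > 1 else 0
        val.extend(group[:n_val])
        train.extend(group[n_val:])
    return train, val


# Hypothetical labeled dataset: 80 "ok" images and 20 "defect" images.
dataset = [(f"img_{i:03d}.png", "ok") for i in range(80)]
dataset += [(f"img_{i:03d}.png", "defect") for i in range(80, 100)]
train, val = stratified_split(dataset)
print(len(train), len(val))
```

Keeping the class proportions matters most when defects are rare. A purely random split of a small dataset could leave the validation set with few or no defect images, and the measured accuracy would then say little about defect detection.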